Digital look-alikes

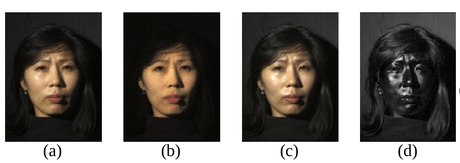

(a) Normal image in dot lighting

(b) Image of the diffuse reflection, captured by placing a vertical polarizer in front of the light source and a horizontal polarizer in front of the camera

(c) Image of the specular highlight reflection, captured by orienting both polarizers vertically

(d) Subtraction of b from c, which yields the specular component

Images are scaled to appear equally bright.

Original image by Debevec et al. – Copyright ACM 2000 – http://dl.acm.org/citation.cfm?doid=311779.344855 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

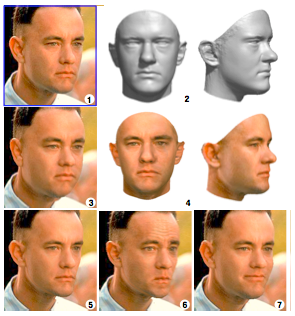

(1) Sculpting a morphable model to one single picture

(2) Produces 3D approximation

(4) Texture capture

(3) The 3D model is rendered back to the image with weight gain

(5) With weight loss

(6) Looking annoyed

(7) Forced to smile

Image 2 by Blanz and Vetter – Copyright ACM 1999 – http://dl.acm.org/citation.cfm?doid=311535.311556 – Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

A digital look-alike is created when no real camera exists, but the subject, imaged with a simulation of a (movie) camera, deceives the viewer into believing it is some living or dead person.

Introduction to digital look-alikes

In cinemas we have seen digital look-alikes for over 15 years. These digital look-alikes have "clothing" (a simulation of clothing is not clothing) or "superhero costumes" and "superbaddie costumes", and they don't need to care about the laws of physics, let alone the laws of physiology. It is generally accepted that digital look-alikes made their public debut in the sequels of The Matrix, i.e. The Matrix Reloaded and The Matrix Revolutions, released in 2003. It can be considered almost certain that it was not possible to make these before 1999, as the final piece of the puzzle for making a (still) digital look-alike that passes human testing, reflectance capture over the human face, was achieved for the first time in 1999 at the University of Southern California and presented to the crème de la crème of the computer graphics field at their annual gathering, SIGGRAPH 2000.[1]

“Do you think that was Hugo Weaving's left cheekbone that Keanu Reeves punched in with his right fist?”

The problems with digital look-alikes

Extremely unfortunately for humankind, organized criminal leagues that possess the weapons capability of making believable-looking synthetic pornography are producing synthetic terror porn[footnote 1] on industrial production pipelines by animating digital look-alikes, and distributing it on the murky Internet in exchange for stacks of money that are getting thinner and thinner as time goes by.

These industrially produced pornographic delusions are causing great human suffering, especially to their direct victims, but they are also tearing our communities and societies apart, sowing blind rage, perceptions of deepening chaos and feelings of powerlessness, and provoking violence. This hate illustration increases and strengthens hate thinking, hate speech and hate crimes, tears our fragile social constructions apart and, with time, perverts humankind's view of humankind into an almost unrecognizable shape, unless we interfere with resolve.

For these reasons the bannable raw materials needed to produce this disinformation terror on the information-industrial production pipelines, i.e. covert models, should be prohibited by law in order to protect humans from arbitrary abuse by criminal parties.

See also in Ban Covert Modeling! wiki

Footnotes

- ↑ If we want to call things by their real names, it is terminologically more precise, more inclusive and more useful to talk about 'synthetic terror porn' than 'synthetic rape porn', because synthesizing recordings of consensual-looking sex scenes can also be terroristic in intent.

References

- ↑ Debevec, Paul (2000). "Acquiring the reflectance field of a human face". Proceedings of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH '00. ACM. pp. 145–156. doi:10.1145/344779.344855. ISBN 978-1581132083. Retrieved 2017-05-24.

Transcluded Wikipedia articles

Human image synthesis article transcluded from Wikipedia

Human image synthesis is technology that can be applied to make believable and even photorealistic renditions[1][2] of human likenesses, moving or still. It has effectively existed since the early 2000s. Many films using computer-generated imagery have featured synthetic images of human-like characters digitally composited onto real or other simulated film material. Towards the end of the 2010s deep learning artificial intelligence has been applied to synthesize images and video that look like humans, without need for human assistance once the training phase has been completed, whereas the old-school 7D route required massive amounts of human work.

Timeline of human image synthesis

- In 1971 Henri Gouraud made the first CG geometry capture and representation of a human face. The model was his wife, Sylvie Gouraud. The 3D model was a simple wire-frame model, and he applied the Gouraud shader he is best known for to produce the first known representation of a human likeness on a computer.[3][4]

- The 1972 short film A Computer Animated Hand by Edwin Catmull and Fred Parke was the first time that computer-generated imagery was used in film to simulate moving human appearance. The film featured a computer-simulated hand and face.

- The 1976 film Futureworld reused parts of A Computer Animated Hand on the big screen.

- The 1983 music video for the song Musique Non-Stop by the German band Kraftwerk aired in 1986. Created by the artist Rebecca Allen, it features non-realistic-looking but clearly recognizable computer simulations of the band members.

- The 1994 film The Crow was the first film production to make use of digital compositing of a computer-simulated representation of a face onto scenes filmed using a body double. Necessity was the muse, as the actor Brandon Lee, who portrayed the protagonist, was tragically and accidentally killed on set.

- In 1999 Paul Debevec et al. of USC captured the reflectance field of a human face with their first version of a light stage. They presented their method at SIGGRAPH 2000.[5]

- In 2003 photorealistic human likenesses made their audience debut in the films The Matrix Reloaded, in the burly brawl sequence where up to 100 Agent Smiths fight Neo, and The Matrix Revolutions, where at the start of the final showdown Agent Smith's cheekbone gets punched in by Neo, leaving the digital look-alike unnaturally unhurt. The Matrix Revolutions bonus DVD documents and depicts the process and the techniques used in some detail, including facial motion capture, limbal motion capture and projection onto models.

- In 2003 The Animatrix: Final Flight of the Osiris, made by Square Pictures, featured state-of-the-art would-be human likenesses that did not quite fool the viewer.

- In 2003 a digital likeness of Tobey Maguire was made for the movies Spider-Man 2 and Spider-Man 3 by Sony Pictures Imageworks.[6]

- In 2005 the Face of the Future project was established[7] by the University of St Andrews and Perception Lab, funded by the EPSRC.[8] The website contains a "Face Transformer", which enables users to transform their face into any ethnicity and age, as well as to transform their face into a painting (in the style of either Sandro Botticelli or Amedeo Modigliani).[9] This process is achieved by combining the user's photograph with an average face.[8]

- In 2009 Debevec et al. presented new digital likenesses, made by Image Metrics, this time of actress Emily O'Brien, whose reflectance was captured with the USC light stage 5.[10] The motion looks fairly convincing in contrast to the clunky run in The Animatrix: Final Flight of the Osiris, which was state of the art in 2003, if photorealism was the intention of the animators.

- In 2009 a digital look-alike of a younger Arnold Schwarzenegger was made for the movie Terminator Salvation though the end result was critiqued as unconvincing. Facial geometry was acquired from a 1984 mold of Schwarzenegger.

- In 2010 Walt Disney Pictures released a sci-fi sequel entitled Tron: Legacy with a digitally rejuvenated digital look-alike of actor Jeff Bridges playing the antagonist CLU.

- At SIGGRAPH 2013 Activision and USC presented the real-time "Digital Ira", a digital face look-alike of Ari Shapiro, an ICT USC research scientist,[11] utilizing the USC light stage X by Ghosh et al. for both reflectance field and motion capture.[12] The end result, both precomputed and rendered in real time on the most modern game GPU of the time, looks fairly realistic.

- In 2014 The Presidential Portrait by USC Institute for Creative Technologies in conjunction with the Smithsonian Institution was made using the latest USC mobile light stage wherein President Barack Obama had his geometry, textures and reflectance captured.[13]

- In 2014 Ian Goodfellow et al. presented the principles of a generative adversarial network. GANs made the headlines in early 2018 with the deepfakes controversies.

- For the 2015 film Furious 7 a digital look-alike of actor Paul Walker, who died in an accident during the filming, was made by Weta Digital to enable the completion of the film.[14]

- In 2016 techniques which allow near real-time counterfeiting of facial expressions in existing 2D video were believably demonstrated.[15]

- In 2016 a digital look-alike of Peter Cushing was made for the film Rogue One, in which he appears to be the same age as the actor was during the filming of the original 1977 Star Wars film.

- At SIGGRAPH 2017 an audio-driven digital look-alike of the upper torso of Barack Obama was presented by researchers from the University of Washington.[16] After a training phase that acquired lip sync and wider facial information from training material consisting of 2D videos with audio, it was driven only by a voice track as source data for the animation.[17]

- Late 2017[18] and early 2018 saw the surfacing of the deepfakes controversy, where porn videos were doctored using deep machine learning so that the face of the actress was replaced by the software's opinion of what another person's face would look like in the same pose and lighting.

- At the 2018 Game Developers Conference Epic Games and Tencent Games demonstrated "Siren", a digital look-alike of the actress Bingjie Jiang. It was made possible with the following technologies: CubicMotion's computer vision system, 3Lateral's facial rigging system and Vicon's motion capture system. The demonstration ran in near real time at 60 frames per second in Unreal Engine 4.[19]

- In 2018 at the World Internet Conference in Wuzhen the Xinhua News Agency presented two digital look-alikes made to resemble its real news anchors Qiu Hao (Chinese language)[20] and Zhang Zhao (English language). The digital look-alikes were made in conjunction with Sogou.[21] Neither the speech synthesis used nor the gesturing of the digital look-alike anchors was good enough to deceive the viewer into mistaking them for real humans imaged with a TV camera.

- In September 2018 Google added "involuntary synthetic pornographic imagery" to its ban list, allowing anyone to request the search engine block results that falsely depict them as "nude or in a sexually explicit situation."[22]

- In February 2019 Nvidia open-sourced StyleGAN, a novel generative adversarial network.[23] Right after this Phillip Wang made the website ThisPersonDoesNotExist.com with StyleGAN to demonstrate that unlimited amounts of often photorealistic-looking facial portraits of no one can be made automatically using a GAN.[24] Nvidia's StyleGAN was presented in a not yet peer-reviewed paper in late 2018.[24]

- At the June 2019 CVPR the MIT CSAIL presented a system titled "Speech2Face: Learning the Face Behind a Voice" that synthesizes likely faces based on just a recording of a voice. It was trained with massive amounts of video of people speaking.

- Since 1 July 2019[25] Virginia has criminalized the sale and dissemination of unauthorized synthetic pornography, but not its manufacture,[26] as § 18.2–386.2, titled 'Unlawful dissemination or sale of images of another; penalty.', became part of the Code of Virginia. The law text states: "Any person who, with the intent to coerce, harass, or intimidate, maliciously disseminates or sells any videographic or still image created by any means whatsoever that depicts another person who is totally nude, or in a state of undress so as to expose the genitals, pubic area, buttocks, or female breast, where such person knows or has reason to know that he is not licensed or authorized to disseminate or sell such videographic or still image is guilty of a Class 1 misdemeanor."[26] The identical bills were House Bill 2678, presented by Delegate Marcus Simon to the Virginia House of Delegates on 14 January 2019, and Senate Bill 1736, introduced three days later to the Senate of Virginia by Senator Adam Ebbin.

- On 1 September 2019 the Texas senate bill SB 751 amendments to the election code came into effect, giving candidates a 30-day protection period before elections during which making and distributing digital look-alikes or synthetic fakes of the candidates is an offense. The law text defines the subject of the law as "a video, created with the intent to deceive, that appears to depict a real person performing an action that did not occur in reality".[27]

- In September 2019 Yle, the Finnish public broadcasting company, aired a piece of experimental journalism in its main news broadcast: a deepfake of the President in office, Sauli Niinistö, made for the purpose of highlighting the advancing disinformation technology and the problems that arise from it.

- On 1 January 2020[28] the California state law AB-602 came into effect, banning the manufacturing and distribution of synthetic pornography without the consent of the people depicted. AB-602 provides victims of synthetic pornography with injunctive relief and poses legal threats of statutory and punitive damages on criminals making or distributing synthetic pornography without consent. The bill AB-602 was signed into law by California Governor Gavin Newsom on 3 October 2019 and was authored by California State Assembly member Marc Berman.[29]

- On 1 January 2020 a Chinese law requiring that synthetically faked footage bear a clear notice of its fakeness came into effect. Failure to comply could be considered a crime, the Cyberspace Administration of China stated on its website. China announced this new law in November 2019.[30] The Chinese government seems to be reserving the right to prosecute both users and online video platforms failing to abide by the rules.[31]

Key breakthrough to photorealism: reflectance capture

In 1999 Paul Debevec et al. of USC did the first known reflectance capture over the human face with their extremely simple light stage. They presented their method and results at SIGGRAPH 2000.[5]

The scientific breakthrough required isolating the subsurface light component (the simulation models glow slightly from within). It can be found using the knowledge that light reflected from the oil-to-air layer retains its polarization, whereas subsurface light loses its polarization. So, equipped only with a movable light source, a movable video camera, two polarizers and a computer program doing extremely simple math, the last piece required to reach photorealism was acquired.[5]
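The "extremely simple math" can be illustrated with a minimal sketch. It assumes two grayscale captures of the same view: one taken with the polarizers crossed (only depolarized subsurface light reaches the camera) and one with the polarizers parallel (surface reflection is also let through). The function name, the nested-list image layout and the pixel values are hypothetical, and the sketch ignores exposure scaling and calibration that a real capture pipeline would need.

```python
def separate_reflection(parallel, cross):
    """Split two polarized captures into diffuse and specular estimates.

    parallel: 2D list of intensities, both polarizers oriented the same way
    cross:    2D list of intensities, polarizers at right angles
    Both images are assumed to be registered and equally exposed.
    """
    # Crossed polarizers block light that kept its polarization, so what
    # remains is treated here as the subsurface (diffuse) component.
    diffuse = [row[:] for row in cross]
    # The per-pixel difference is taken as the specular component.
    # Negative values (noise) are clamped to zero.
    specular = [
        [max(p - c, 0.0) for p, c in zip(p_row, c_row)]
        for p_row, c_row in zip(parallel, cross)
    ]
    return diffuse, specular


# Hypothetical 2x2 example images
parallel = [[0.75, 0.5], [0.5, 0.25]]
cross = [[0.25, 0.5], [0.5, 0.5]]
diffuse, specular = separate_reflection(parallel, cross)
print(specular)
```

Only the top-left pixel of this toy example carries a highlight; everywhere else the difference is zero or clamped to zero.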

For a believable result both light reflected from skin (BRDF) and within the skin (a special case of BTDF) which together make up the BSDF must be captured and simulated.

Capturing

- The 3D geometry and textures are captured onto a 3D model by a 3D reconstruction method, such as sampling the target by means of 3D scanning with an RGB XYZ scanner such as Arius3d or Cyberware (textures from photos, not pure RGB XYZ scanner), stereophotogrammetrically from synchronized photos or even from enough repeated non-simultaneous photos. Digital sculpting can be used to make up models of the body parts for which data cannot be acquired e.g. parts of the body covered by clothing.

- For believable results also the reflectance field must be captured or an approximation must be picked from the libraries to form a 7D reflectance model of the target.
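As an illustration of the stereophotogrammetric route listed above, the following minimal sketch computes the depth of a single point seen in two synchronized photos. It assumes an idealized, rectified stereo pair with a known focal length and camera separation; the function name and the numbers are hypothetical, and a real pipeline would also need camera calibration and dense feature matching.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth of a point from a rectified stereo pair.

    focal_length_px: focal length of both cameras, in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the point between the two photos
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    # Similar triangles: depth / baseline = focal length / disparity
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1200 px focal length, cameras 12.5 cm apart
depth = depth_from_disparity(1200.0, 0.125, 300.0)
print(depth)  # depth in meters
```

Repeating this for every matched pixel pair gives a depth map from which a 3D surface can be reconstructed.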

Synthesis

The whole process of making digital look-alikes, i.e. characters so lifelike and realistic that they can be passed off as pictures of humans, is a very complex task, as it requires photorealistically modeling, animating, cross-mapping, and rendering the soft body dynamics of the human appearance.

Synthesis with an actor and suitable algorithms is applied using powerful computers. The actor's part in the synthesis is to take care of mimicking human expressions in still picture synthesizing and also human movement in motion picture synthesizing. Algorithms are needed to simulate laws of physics and physiology and to map the models and their appearance, movements and interaction accordingly.

Often both physics/physiology-based (i.e. skeletal animation) and image-based modeling and rendering are employed in the synthesis part. Hybrid models employing both approaches have shown the best results in realism and ease of use. Morph target animation reduces the workload by giving higher-level control, where different facial expressions are defined as deformations of the model, which allows facial expressions to be tuned intuitively. Morph target animation can then morph the model between different defined facial expressions or body poses without much need for human intervention.
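A minimal sketch of morph target blending, assuming the common formulation in which each expression is stored as a full copy of the mesh and the result is the base mesh plus a weighted sum of per-vertex offsets. The function name, the two-vertex "mesh" and the target names are hypothetical and serve only as illustration.

```python
def blend_morph_targets(base, targets, weights):
    """Deform a base mesh by a weighted sum of morph target offsets.

    base:    list of (x, y, z) vertex positions of the neutral model
    targets: dict mapping expression name -> list of (x, y, z) positions
    weights: dict mapping expression name -> blend weight (usually 0..1)
    """
    blended = []
    for i, vertex in enumerate(base):
        out = list(vertex)
        for name, weight in weights.items():
            target_vertex = targets[name][i]
            for axis in range(3):
                # Add the weighted offset of this target from the base
                out[axis] += weight * (target_vertex[axis] - vertex[axis])
        blended.append(tuple(out))
    return blended


# Hypothetical two-vertex model with two expressions
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)],
    "frown": [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0)],
}
half_smile = blend_morph_targets(base, targets, {"smile": 0.5})
print(half_smile)
```

Animating the weights over time morphs the model between expressions, which is the higher-level control described above: an animator tunes a few weights instead of moving individual vertices.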

Using displacement mapping plays an important part in getting a realistic result with fine detail of skin such as pores and wrinkles as small as 100 μm.
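The core of displacement mapping can be sketched as moving each vertex along its surface normal by a height sampled from a map. The sketch below assumes unit-length normals and one pre-sampled height value per vertex. The function name, the data and the scale (0.0001 m, i.e. 100 μm, chosen to match the detail size mentioned above) are illustrative assumptions rather than values from any particular production pipeline.

```python
def displace_vertices(vertices, normals, heights, scale):
    """Move each vertex along its normal by its sampled height times scale.

    vertices: list of (x, y, z) positions
    normals:  list of unit-length (nx, ny, nz) normals, one per vertex
    heights:  list of height-map samples in 0..1, one per vertex
    scale:    displacement in scene units for a height value of 1.0
    """
    displaced = []
    for (x, y, z), (nx, ny, nz), h in zip(vertices, normals, heights):
        offset = h * scale
        displaced.append((x + nx * offset, y + ny * offset, z + nz * offset))
    return displaced


# Hypothetical flat skin patch facing +z, with heights sampled from a pore map
vertices = [(0.0, 0.0, 0.0), (0.001, 0.0, 0.0), (0.002, 0.0, 0.0)]
normals = [(0.0, 0.0, 1.0)] * 3
heights = [0.0, 1.0, 0.5]
detailed = displace_vertices(vertices, normals, heights, scale=0.0001)
print(detailed)
```

Unlike bump or normal mapping, this changes the actual geometry, so the fine detail also affects silhouettes and shadows.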

Machine learning approach

In the late 2010s, machine learning, and more precisely generative adversarial networks (GAN), were used by NVIDIA to produce random yet photorealistic human-like portraits. The system, named StyleGAN, was trained on a database of 70,000 images from the images depository website Flickr. The source code was made public on GitHub in 2019.[32] Outputs of the generator network from random input were made publicly available on a number of websites.[33][34]

Similarly, since 2018, deepfake technology has allowed GANs to swap faces between actors; combined with the ability to fake voices, GANs can thus generate fake videos that seem convincing.[35]
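For reference, the training objective of a generative adversarial network, in the original formulation by Goodfellow et al. (2014), is a two-player minimax game between a generator $G$ and a discriminator $D$. Here $x$ is a real training image, $z$ is random input noise, $D(\cdot)$ is the discriminator's estimate of the probability that its input is real, and $G(z)$ is a synthesized image. This is the generic objective only; StyleGAN and face-swapping tools may use different architectures and loss variants.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator is trained to tell real images from generated ones, while the generator is trained to produce images the discriminator cannot tell apart from real ones.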

Applications

Main applications fall within the domains of stock photography, synthetic datasets, virtual cinematography, computer and video games and covert disinformation attacks.[36][34] Some facial-recognition AI use images generated by other AI as synthetic data for training.[37]

Furthermore, some research suggests that it can have therapeutic effects as "psychologists and counselors have also begun using avatars to deliver therapy to clients who have phobias, a history of trauma, addictions, Asperger’s syndrome or social anxiety."[38] The strong memory imprint and brain activation effects caused by watching a digital look-alike avatar of yourself are dubbed the Doppelgänger effect.[38] The doppelgänger effect can heal when a covert disinformation attack is exposed as such to the targets of the attack.

Related issues

Speech synthesis has been verging on being completely indistinguishable from a recording of a real human's voice since the 2016 introduction of the voice editing and generation software Adobe Voco, a prototype slated to be a part of the Adobe Creative Suite, and DeepMind WaveNet, a prototype from Google.[39] The ability to steal and manipulate other people's voices raises obvious ethical concerns.[40]

At the 2018 Conference on Neural Information Processing Systems (NeurIPS) researchers from Google presented the work 'Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis', which transfers learning from speaker verification to achieve text-to-speech synthesis that can be made to sound almost like anybody from a speech sample of only 5 seconds.[41]

Sourcing images for AI training raises a question of privacy, as the people whose images are used for training did not consent.[42]

Digital sound-alike technology has found its way into the hands of criminals: in 2019 Symantec researchers knew of three cases where the technology had been used for crime.[43][44]

This, coupled with the fact that (as of 2016) techniques which allow near real-time counterfeiting of facial expressions in existing 2D video have been believably demonstrated, increases the stress on the disinformation situation.[15]

See also

- Motion-capture acting

- Internet manipulation

- Media synthesis

- Propaganda techniques

- 3D data acquisition and object reconstruction

- 3D reconstruction from multiple images

- 3D pose estimation in general and articulated body pose estimation especially to do with capturing human likeness.

- 4D reconstruction

- Finger tracking

- Gesture recognition

- StyleGAN

References

- ^ Physics-based muscle model for mouth shape control on IEEE Xplore (requires membership)

- ^ Realistic 3D facial animation in virtual space teleconferencing on IEEE Xplore (requires membership)

- ^ Berlin, Isabelle (14 September 2008). "Images de synthèse : palme de la longévité pour l'ombrage de Gouraud". Interstices (in French). Retrieved 3 October 2024.

- ^ "Images de synthèse : palme de la longévité pour l'ombrage de Gouraud". 14 September 2008.

- ^ a b c Debevec, Paul (2000). "Acquiring the reflectance field of a human face". Proceedings of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH '00. ACM. pp. 145–156. doi:10.1145/344779.344855. ISBN 978-1581132083. S2CID 2860203. Retrieved 24 May 2017.

- ^ Pighin, Frédéric. "Siggraph 2005 Digital Face Cloning Course Notes" (PDF). Retrieved 24 May 2017.

- ^ "St. Andrews Face Transformer". Futility Closet. 30 January 2005. Retrieved 7 December 2020.

- ^ a b West, Marc (4 December 2007). "Changing the face of science". Plus Magazine. Retrieved 7 December 2020.

- ^ Goddard, John (27 January 2010). "The many faces of race research". thestar.com. Retrieved 7 December 2020.

- ^ In this TED talk video at 00:04:59 you can see two clips, one with the real Emily shot with a real camera and one with a digital look-alike of Emily, shot with a simulation of a camera – Which is which is difficult to tell. Bruce Lawmen was scanned using USC light stage 6 in still position and also recorded running there on a treadmill. Many, many digital look-alikes of Bruce are seen running fluently and natural looking at the ending sequence of the TED talk video.

- ^ ReForm – Hollywood's Creating Digital Clones (youtube). The Creators Project. 24 May 2017.

- ^ Debevec, Paul. "Digital Ira SIGGRAPH 2013 Real-Time Live". Archived from the original on 21 February 2015. Retrieved 24 May 2017.

- ^ "Scanning and printing a 3D portrait of President Barack Obama". University of Southern California. 2013. Archived from the original on 17 September 2015. Retrieved 24 May 2017.

- ^ Giardina, Carolyn (25 March 2015). "'Furious 7' and How Peter Jackson's Weta Created Digital Paul Walker". The Hollywood Reporter. Retrieved 24 May 2017.

- ^ a b Thies, Justus (2016). "Face2Face: Real-time Face Capture and Reenactment of RGB Videos". Proc. Computer Vision and Pattern Recognition (CVPR), IEEE. Retrieved 24 May 2017.

- ^ "Synthesizing Obama: Learning Lip Sync from Audio". grail.cs.washington.edu. Retrieved 3 October 2024.

- ^ Suwajanakorn, Supasorn; Seitz, Steven; Kemelmacher-Shlizerman, Ira (2017), Synthesizing Obama: Learning Lip Sync from Audio, University of Washington, retrieved 2 March 2018

- ^ Roettgers, Janko (21 February 2018). "Porn Producers Offer to Help Hollywood Take Down Deepfake Videos". Variety. Retrieved 28 February 2018.

- ^ Takahashi, Dean (21 March 2018). "Epic Games shows off amazing real-time digital human with Siren demo". VentureBeat. Retrieved 10 September 2018.

- ^ Kuo, Lily (9 November 2018). "World's first AI news anchor unveiled in China". TheGuardian.com. Retrieved 9 November 2018.

- ^ Hamilton, Isobel Asher (9 November 2018). "China created what it claims is the first AI news anchor — watch it in action here". Business Insider. Retrieved 9 November 2018.

- ^ Harwell, Drew (30 December 2018). "Fake-porn videos are being weaponized to harass and humiliate women: 'Everybody is a potential target'". The Washington Post. Retrieved 14 March 2019. Quote: In September [of 2018], Google added "involuntary synthetic pornographic imagery" to its ban list

- ^ "NVIDIA Open-Sources Hyper-Realistic Face Generator StyleGAN". Medium.com. 9 February 2019. Retrieved 3 October 2019.

- ^ a b Paez, Danny (13 February 2019). "This Person Does Not Exist Is the Best One-Off Website of 2019". Inverse. Retrieved 5 March 2018.

- ^ "New state laws go into effect July 1". 24 June 2019.

- ^ a b "§ 18.2–386.2. Unlawful dissemination or sale of images of another; penalty". Virginia. Retrieved 1 January 2020.

- ^ "Relating to the creation of a criminal offense for fabricating a deceptive video with intent to influence the outcome of an election". Texas. 14 June 2019. Retrieved 2 January 2020. Quote: In this section, "deep fake video" means a video, created with the intent to deceive, that appears to depict a real person performing an action that did not occur in reality

- ^ Johnson, R.J. (30 December 2019). "Here Are the New California Laws Going Into Effect in 2020". KFI. iHeartMedia. Retrieved 1 January 2020.

- ^ Mihalcik, Carrie (4 October 2019). "California laws seek to crack down on deepfakes in politics and porn". cnet.com. CNET. Retrieved 14 October 2019.

- ^ "China seeks to root out fake news and deepfakes with new online content rules". Reuters.com. Reuters. 29 November 2019. Retrieved 8 December 2019.

- ^ Statt, Nick (29 November 2019). "China makes it a criminal offense to publish deepfakes or fake news without disclosure". The Verge. Retrieved 8 December 2019.

- ^ Synced (9 February 2019). "NVIDIA Open-Sources Hyper-Realistic Face Generator StyleGAN". Synced. Retrieved 4 August 2020.

- ^ StyleGAN public showcase website

- ^ a b Porter, Jon (20 September 2019). "100,000 free AI-generated headshots put stock photo companies on notice". The Verge. Retrieved 7 August 2020.

- ^ "What Is a Deepfake?". PCMAG.com. March 2020. Retrieved 8 June 2020.

- ^ Harwell, Drew. "Dating apps need women. Advertisers need diversity. AI companies offer a solution: Fake people". Washington Post. Retrieved 4 August 2020.

- ^ "Neural Networks Need Data to Learn. Even If It's Fake". Quanta Magazine. 11 December 2023. Retrieved 18 June 2023.

- ^ a b Murphy, Samantha (2023). "Scientific American: Your Avatar, Your Guide" (.pdf). Scientific American / Uni of Stanford. Retrieved 11 December 2023.

- ^ "WaveNet: A Generative Model for Raw Audio". Deepmind.com. 8 September 2016. Archived from the original on 27 May 2017. Retrieved 24 May 2017.

- ^ "Adobe Voco 'Photoshop-for-voice' causes concern". BBC.com. BBC. 7 November 2016. Retrieved 5 July 2016.

- ^ Jia, Ye; Zhang, Yu; Weiss, Ron J. (12 June 2018), "Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis", Advances in Neural Information Processing Systems, 31: 4485–4495, arXiv:1806.04558, Bibcode:2018arXiv180604558J

- ^ Rachel Metz (19 April 2019). "If your image is online, it might be training facial-recognition AI". CNN. Retrieved 4 August 2020.

- ^ "Fake voices 'help cyber-crooks steal cash'". bbc.com. BBC. 8 July 2019. Retrieved 16 April 2020.

- ^ Harwell, Drew (16 April 2020). "An artificial-intelligence first: Voice-mimicking software reportedly used in a major theft". Washington Post. Retrieved 8 September 2019.